Creating a Reusable Infrastructure-as-Code Test Harness using ‘Terraform Test’

Cloud services come in all shapes and sizes, with a ton of features and configuration options. Infrastructure-as-Code helps put method to the madness, but why don’t more Azure product teams ship working examples for the most common usage scenarios?

There seems to be a pattern: you might get one example, in Bicep, which atrophies and dies after six months, leaving your customers fumbling around the internet trying to piece together a working version of your service in Terraform. Or perhaps in Bicep; I guess some people do use Bicep?

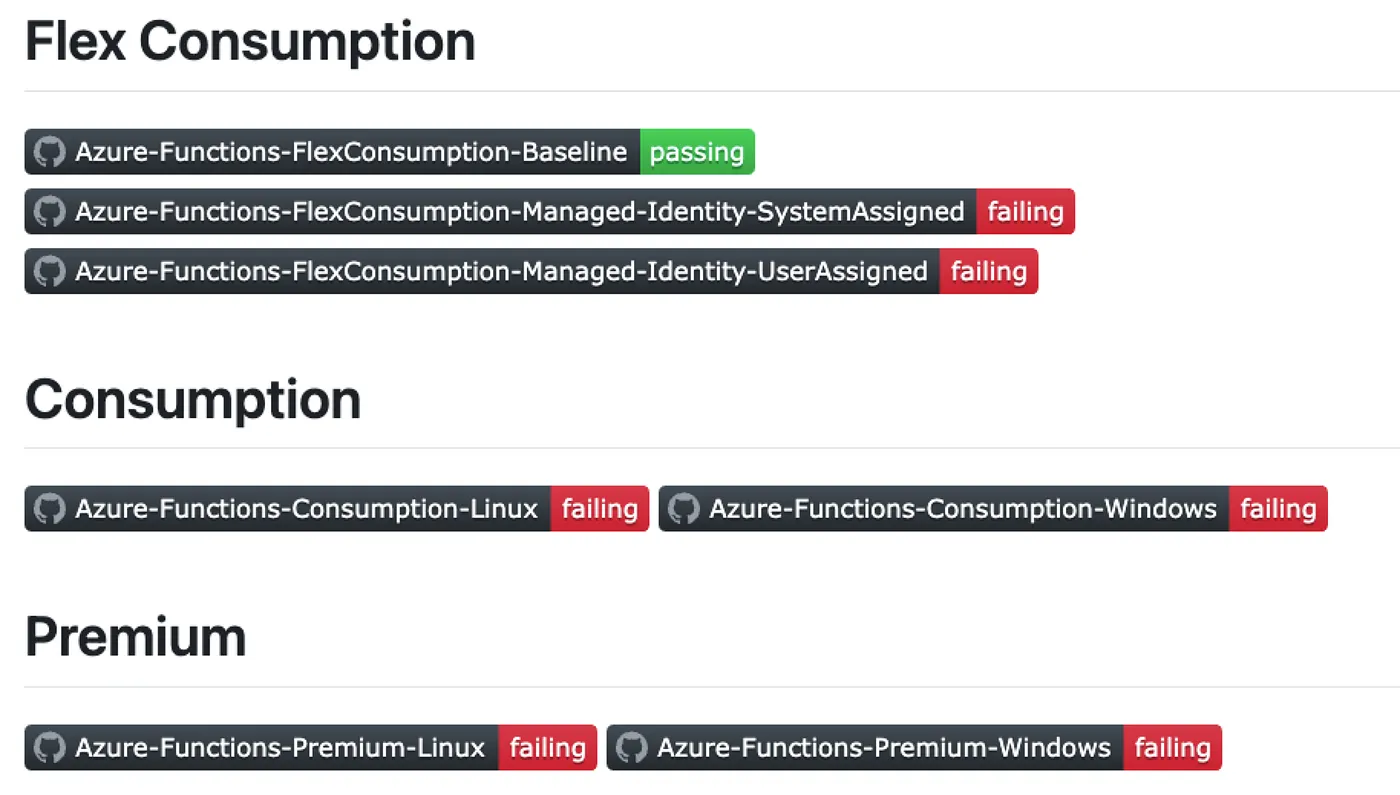

This article documents what broke, how I systematically diagnosed the failures using a Terraform-based test harness, and how I built a reproducible, modular testing pattern for Azure Functions. Along the way, I uncovered a key limitation when using Terraform to provision Azure Functions on a Flex Consumption hosting plan.

You can find the code demonstrating my Terraform Test Harness here:

https://github.com/markti/automation-tests-azure-functions

Automation Testing Strategy: Building a Reusable Terraform Test Harness

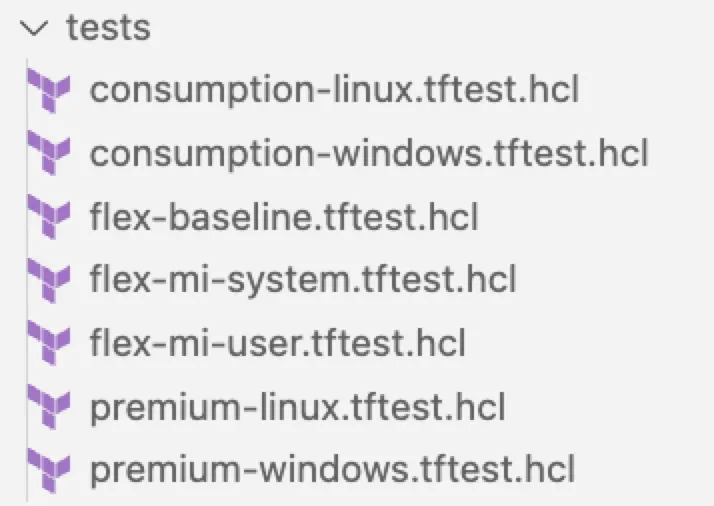

To isolate and verify each scenario systematically, I built a Terraform test suite that validates not just provisioning a Function App resource in Azure but also deploying my .NET Azure Function application code and verifying that it works. I did this across the multitude of hosting options:

- Consumption Plan (Linux, Windows)

- Premium Plan (Linux, Windows)

- Flex Consumption (Storage Account Connection String, System-Assigned Managed Identity, User-Assigned Managed Identity)

Doing this allowed me to confirm that Flex Consumption currently fails with Managed Identity under Terraform, while still validating the other hosting models within the same structured testing workflow. I suspect that once I get the quota issues for Consumption and Premium resolved, those test scenarios will pass as well.
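To make the scenario matrix concrete, here is a minimal sketch of how a single .tftest.hcl file could drive each hosting option as its own run block. The file name, the module paths (./modules/consumption-linux and so on) and the function_app_name output are hypothetical placeholders, not the layout of the linked repository; each module is assumed to declare the three standard variables and expose that output.

```hcl
# function_apps.tftest.hcl (hypothetical file name)
# File-level variables are passed to every run block below.
variables {
  application_name = "fn-tf-tests"
  environment_name = "test"
  location         = "westus3"
}

# One run block per hosting scenario. Each points at its own
# hypothetical module, so each scenario gets its own state.
run "consumption_linux" {
  command = apply

  module {
    source = "./modules/consumption-linux"
  }

  assert {
    condition     = output.function_app_name != ""
    error_message = "Consumption (Linux) Function App name output is empty."
  }
}

run "flex_connection_string" {
  command = apply

  module {
    source = "./modules/flex-connection-string"
  }

  assert {
    condition     = output.function_app_name != ""
    error_message = "Flex Consumption (connection string) Function App name output is empty."
  }
}

# Further scenarios (Premium, Flex with managed identity, ...)
# follow the same shape.
```

Running terraform test from the directory that contains the test file executes the run blocks in order and, once the file finishes, destroys everything those runs created.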

Consistent Variable Structure for Reliability

A core principle for maintainable, reusable Terraform testing is a consistent variable structure across all modules and test scenarios. Instead of letting each module invent its own variable names, I standardized the inputs across tests:

```hcl
variable "application_name" { type = string }
variable "environment_name" { type = string }
variable "location" { type = string }
```

These serve as base identifiers for all resources:

- application_name: ties resources to a specific workload under test.

- environment_name: indicates the environment context (test, dev, staging).

- location: specifies the Azure region, easily swapped per testing needs.

This may seem pretty basic, but when you run a huge suite of tests that provision resources into an Azure subscription, you need to make sure they don’t conflict with each other, and you need traceability back to a specific test’s deployment in case you have to run diagnostics.
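The same standardization can be pushed a little further with descriptions and validation rules, so a bad value fails at plan time instead of midway through a deployment. This is a minimal sketch: the regular expression is my own assumption about a sensible naming rule, and deployment_package_path is declared here only because the test variables set it.

```hcl
variable "application_name" {
  type        = string
  description = "Identifies the workload under test; used as a prefix in every resource name."

  validation {
    # Lowercase letters, digits and hyphens keep names portable across
    # most Azure resource types (storage accounts are stricter still).
    condition     = can(regex("^[a-z0-9-]+$", var.application_name))
    error_message = "application_name may only contain lowercase letters, digits and hyphens."
  }
}

variable "environment_name" {
  type        = string
  description = "Environment context for the deployment, such as test, dev or staging."
}

variable "location" {
  type        = string
  description = "Azure region to deploy into, for example westus3."
}

variable "deployment_package_path" {
  type        = string
  description = "Path to the zipped Function App deployment package."
}
```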

Provider Configuration for Stability

All tests use a minimal but consistent azurerm provider configuration:

```hcl
provider "azurerm" {
  features {}

  resource_provider_registrations = "none"
}
```

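Setting resource_provider_registrations = "none" stops the provider from implicitly registering Azure Resource Providers, which reduces runtime noise and improves speed, especially in newly provisioned Azure subscriptions. The trade-off is that the Resource Providers your tests actually use (for example Microsoft.Web and Microsoft.Storage) must already be registered in the subscription. I’ve been bitten by an Azure Resource Provider that just wouldn’t finish registering in some obscure Azure Region I’m never gonna use, and it crippled my workflow. Not gonna do that anymore.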
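Because resource_provider_registrations only exists in the 4.x line of the azurerm provider, it is worth pinning provider versions next to it. A sketch under assumed version constraints: azurerm_function_app_flex_consumption shipped in a later 4.x release than 4.0, so check the azurerm changelog and raise the floor if your tests need that resource.

```hcl
terraform {
  # .tftest.hcl files and the terraform test command arrived in Terraform 1.6.
  required_version = ">= 1.6.0"

  required_providers {
    # resource_provider_registrations was introduced in azurerm 4.0.
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 4.0"
    }

    # Supplies random_string for the per-run name suffix.
    random = {
      source  = "hashicorp/random"
      version = "~> 3.0"
    }
  }
}
```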

Variable Assignment for Test Runs

I have no shame. In the tftest.hcl file I just hard-code these configuration settings. It keeps things readable and easy to change.

```hcl
variables {
  application_name        = "fn-tf-tests"
  environment_name        = "test"
  location                = "westus3"
  deployment_package_path = "./dotnet-deployment.zip"
}
```

The application_name / environment_name pattern is not a new one, but it ensures clarity in logs and the Azure Portal while providing regional flexibility and easy CI/CD integration. My GitHub Actions stay happy because they never stomp on each other’s toes!
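Hard-coded defaults still leave room to vary a single scenario, because variables set inside a run block take precedence over the file-level variables block. A short sketch; the run name flex_eastus2 and the eastus2 region are illustrative only.

```hcl
# File-level defaults, shared by every run block in this file.
variables {
  application_name        = "fn-tf-tests"
  environment_name        = "test"
  location                = "westus3"
  deployment_package_path = "./dotnet-deployment.zip"
}

# Same configuration, different region: only location is overridden,
# every other value comes from the file-level block above.
run "flex_eastus2" {
  command = apply

  variables {
    location = "eastus2"
  }
}
```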

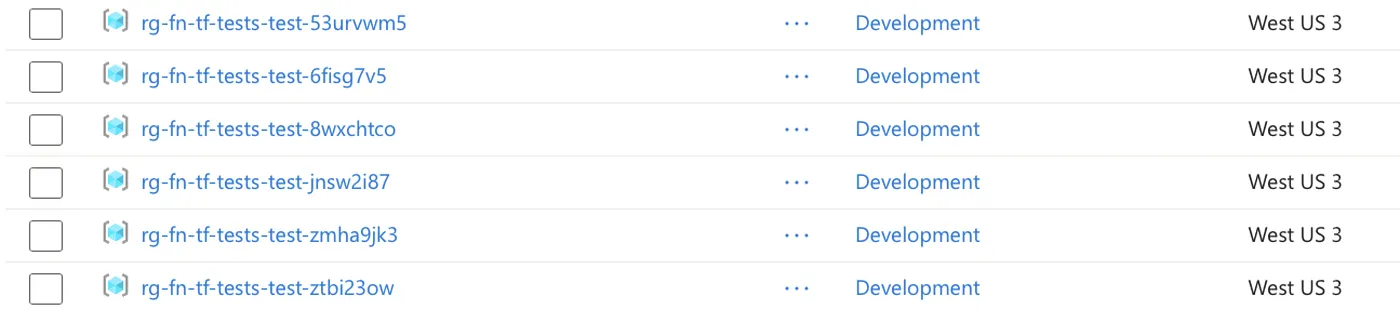

Resource Group Isolation with Random Suffixes

To avoid naming collisions during repeated test runs, I append random_string suffixes to resource names:

```hcl
resource "azurerm_resource_group" "main" {
  name     = "rg-${var.application_name}-${var.environment_name}-${random_string.suffix.result}"
  location = var.location
}
```

This gives you:

- Parallel test runs without naming conflicts

- Clean teardown after each test, since every run owns its own resource group

- Human-readable names with a predictable prefix for debugging in the Azure Portal
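The resource group above references random_string.suffix without defining it. A minimal definition, assuming the hashicorp/random provider; the length of 8 is my own choice. Restricting the suffix to lowercase letters and digits matters because the same suffix is reused in the storage account name, which Azure limits to 3 to 24 lowercase letters and numbers.

```hcl
# Per-run suffix shared by every resource name in the scenario.
resource "random_string" "suffix" {
  length  = 8
  special = false # no punctuation, which many Azure resource names reject
  upper   = false # storage account names must be lowercase
}
```

With the "st" prefix used for the storage account, this yields a 10-character name, comfortably inside the limit.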

Flex Consumption Deployment: Why It’s Different

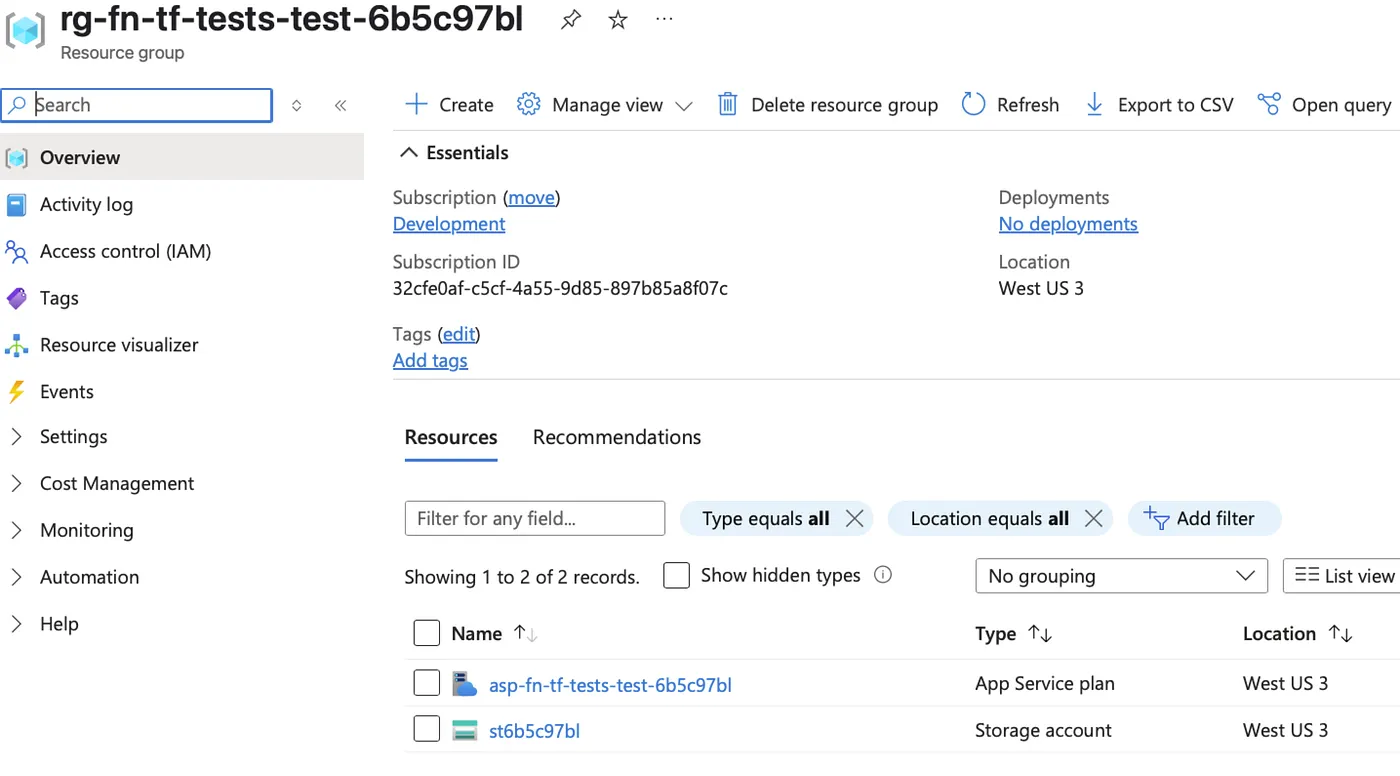

Unlike the Consumption or Premium plans, Flex Consumption requires you to explicitly provide a storage container for deployments; it does not manage that storage automatically. With Terraform, the only option that currently works for Flex Consumption is authenticating to that storage with a Storage Account Connection String. It starts with a storage account:

```hcl
resource "azurerm_storage_account" "function" {
  name                     = "st${random_string.suffix.result}"
  resource_group_name      = azurerm_resource_group.main.name
  location                 = azurerm_resource_group.main.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}
```

Inside that account, the deployment package lives in an explicit, private blob container:

```hcl
resource "azurerm_storage_container" "flex" {
  name                  = "flex"
  storage_account_id    = azurerm_storage_account.function.id
  container_access_type = "private"
}
```

This container is then referenced in your azurerm_function_app_flex_consumption resource using:

- storage_container_type = "blobContainer"

- storage_container_endpoint pointing to the blob container URL

- storage_authentication_type = "StorageAccountConnectionString"

- storage_access_key from the storage account’s primary key

App Service Plan for Flex Consumption

Flex Consumption requires explicitly defining an App Service Plan with the FC1 SKU:

```hcl
resource "azurerm_service_plan" "main" {
  name                = "asp-${var.application_name}-${var.environment_name}-${random_string.suffix.result}"
  resource_group_name = azurerm_resource_group.main.name
  location            = azurerm_resource_group.main.location
  os_type             = "Linux"
  sku_name            = "FC1"
}
```
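Because the plan’s SKU and OS type are plain configuration values, they are known at plan time, so a run block with command = plan can check them quickly without creating any Azure resources. A sketch; the run name is hypothetical.

```hcl
# Plan-only run: nothing is created, so this check is fast.
run "flex_plan_uses_fc1" {
  command = plan

  assert {
    condition     = azurerm_service_plan.main.sku_name == "FC1"
    error_message = "Flex Consumption requires an App Service Plan with SKU FC1."
  }

  assert {
    condition     = azurerm_service_plan.main.os_type == "Linux"
    error_message = "Flex Consumption plans must run on Linux."
  }
}
```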

Provisioning the Azure Function App

This deployment follows a consistent naming strategy for traceability and explicitly links the App Service Plan to the Azure Function App.

```hcl
resource "azurerm_function_app_flex_consumption" "main" {
  name                = "func-dotnet-${var.application_name}-${var.environment_name}-${random_string.suffix.result}"
  resource_group_name = azurerm_resource_group.main.name
  location            = azurerm_resource_group.main.location
  service_plan_id     = azurerm_service_plan.main.id

  # Deployment storage: connection string auth is the only option that works today.
  # primary_blob_endpoint already ends with "/", so the container name is appended directly.
  storage_container_type      = "blobContainer"
  storage_container_endpoint  = "${azurerm_storage_account.function.primary_blob_endpoint}${azurerm_storage_container.flex.name}"
  storage_authentication_type = "StorageAccountConnectionString"
  storage_access_key          = azurerm_storage_account.function.primary_access_key

  # .NET 8 isolated worker
  runtime_name    = "dotnet-isolated"
  runtime_version = "8.0"

  # Scale-out ceiling and per-instance memory
  maximum_instance_count = 50
  instance_memory_in_mb  = 2048

  site_config {}
}
```

It uses connection string authentication, which is currently the only working storage authentication option for Flex Consumption under Terraform, and configures the app to run the dotnet-isolated worker on .NET 8, a long-term support (LTS) release of .NET.
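Provisioning is only half the test; the harness also checks that the deployed code answers. One way to sketch that step is a small helper module that calls the app with the hashicorp/http data source, then asserts on the status code in a follow-up run. Assumptions: the package from deployment_package_path has already been deployed (that step isn’t shown), the resource exports default_hostname as the other azurerm Function App resources do, the app has an HTTP-triggered function at /api/hello, and the module path, output and run names are all hypothetical. The retry block needs a recent 3.x release of the http provider.

```hcl
# --- in the module under test (hypothetical output) ---
output "function_app_default_hostname" {
  value = azurerm_function_app_flex_consumption.main.default_hostname
}

# --- tests/http-check/main.tf (hypothetical helper module) ---
variable "url" {
  type = string
}

# Calls the deployed function; retries give a freshly deployed
# app time to come up before the request is treated as failed.
data "http" "function" {
  url = var.url

  retry {
    attempts     = 5
    min_delay_ms = 5000
    max_delay_ms = 30000
  }
}

output "status_code" {
  value = data.http.function.status_code
}

# --- in the .tftest.hcl file ---
run "deploy_flex" {
  command = apply
}

run "verify_flex" {
  command = apply

  module {
    source = "./tests/http-check"
  }

  variables {
    # Reads an output from the earlier run block.
    url = "https://${run.deploy_flex.function_app_default_hostname}/api/hello"
  }

  assert {
    condition     = output.status_code == 200
    error_message = "Function endpoint did not return HTTP 200."
  }
}
```

Keeping the HTTP check in its own module means the same helper can verify every hosting scenario by passing a different URL.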

Conclusion

Azure Functions Flex Consumption, combined with Terraform, is not ready today if you require Managed Identity (I do). This is a frustrating experience for automation engineers, and it’s hard not to feel like it’s a bait and switch. This situation can be avoided, but only if product teams take automation scenarios seriously. Product teams should ship features only with verified, end-to-end automation scenarios. It doesn’t help if I can automate something that is broken. Supporting something halfway is about as useful as not supporting it at all.