Designing Scalable Infrastructure-as-Code with Terraform Root Modules

As teams grow and services evolve, organizing infrastructure as code (IaC) in a way that scales across multiple teams becomes essential. A well-designed Terraform root module structure is key to managing this complexity. The foundation lies in componentizing infrastructure into individual root modules, each with its own lifecycle (terraform plan, terraform apply, terraform destroy) and its own Terraform state file.

These root modules must also be environment-aware, allowing the same infrastructure to be deployed for different environments (e.g., DEV, TEST, PROD) to support parallel development and deployment of application code. To explore this design pattern in practice, let’s look at Azure Functions as a concrete example.
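As a minimal sketch of what "environment-aware" can mean in practice, a root module might accept the target environment as an input and derive resource names from it. The variable name `environment`, the allowed values, and the `name_suffix` local are assumptions for illustration, not part of the original design.

```hcl
# variables.tf (hypothetical file inside any root module)
variable "environment" {
  type        = string
  description = "Target environment for this deployment."

  # Reject anything other than the environments this module is meant for.
  validation {
    condition     = contains(["dev", "test", "prod"], var.environment)
    error_message = "The environment must be one of: dev, test, prod."
  }
}

locals {
  # Suffix appended to resource names so each environment gets distinct resources.
  name_suffix = var.environment
}
```

The same code could then be applied once per environment, for example with `terraform apply -var="environment=dev"`, with each run pointed at its own state file.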

Understanding Azure Functions in the IaC Context

Azure Functions is a serverless compute service that hosts individual pieces of application code. Each Function App resource in Azure represents a single application codebase. This is an important distinction: unlike virtual machines (VMs), which can run various workloads, Function Apps are built for isolation and single-purpose deployments. If you have components written in multiple languages like C#, Python, or Go, you need a separate Function App for each.

This design pattern isn’t a limitation — it’s a best practice. Long gone are the days of dumping workloads onto monolithic VMs. With containers and Kubernetes, we’ve moved toward compartmentalized workloads with orchestration and resiliency at scale.

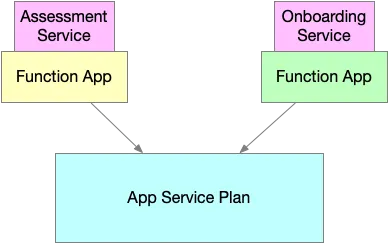

The Function App in Azure is akin to a pod in Kubernetes, serving as the logical container for a single codebase. But just as a pod needs a node to run on, a Function App needs an App Service Plan. The App Service Plan is similar to a Kubernetes cluster; it manages a fleet of compute capacity that the Function Apps use. Depending on its configuration, it might represent a fixed number of nodes or abstract, scalable throughput. Without it, the Function App cannot exist.

This dependency creates a clear infrastructure layer: the App Service Plan must be provisioned before any Function App. Once this dependency is identified, we see the formation of a tiered infrastructure model where multiple workloads can share foundational resources.
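The dependency can be sketched with the azurerm provider, where the Function App points at its plan through `service_plan_id`. Everything here is shown in one configuration purely to make the relationship visible. All resource names, the region, the Linux OS choice, and the `Y1` SKU are assumptions, and a `provider "azurerm"` block is assumed to be configured elsewhere.

```hcl
# Illustrative only: names, region, and SKU are hypothetical.
resource "azurerm_resource_group" "example" {
  name     = "rg-functions-example"
  location = "eastus"
}

# Foundational layer: the compute capacity that Function Apps run on.
resource "azurerm_service_plan" "example" {
  name                = "asp-example"
  resource_group_name = azurerm_resource_group.example.name
  location            = azurerm_resource_group.example.location
  os_type             = "Linux"
  sku_name            = "Y1" # Consumption tier, chosen only for the sketch
}

# Storage account used by the Function App host.
resource "azurerm_storage_account" "example" {
  name                     = "stfuncexample001" # must be globally unique
  resource_group_name      = azurerm_resource_group.example.name
  location                 = azurerm_resource_group.example.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}

# Workload layer: cannot be created until the plan exists.
resource "azurerm_linux_function_app" "example" {
  name                       = "func-example-001" # must be globally unique
  resource_group_name        = azurerm_resource_group.example.name
  location                   = azurerm_resource_group.example.location
  service_plan_id            = azurerm_service_plan.example.id
  storage_account_name       = azurerm_storage_account.example.name
  storage_account_access_key = azurerm_storage_account.example.primary_access_key

  site_config {}
}
```

Because `service_plan_id` references the plan, Terraform orders the plan before the Function App within a single configuration. Once the two live in different root modules, that ordering has to be handled outside Terraform.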

Real-World Application: Microservices with Independent Teams

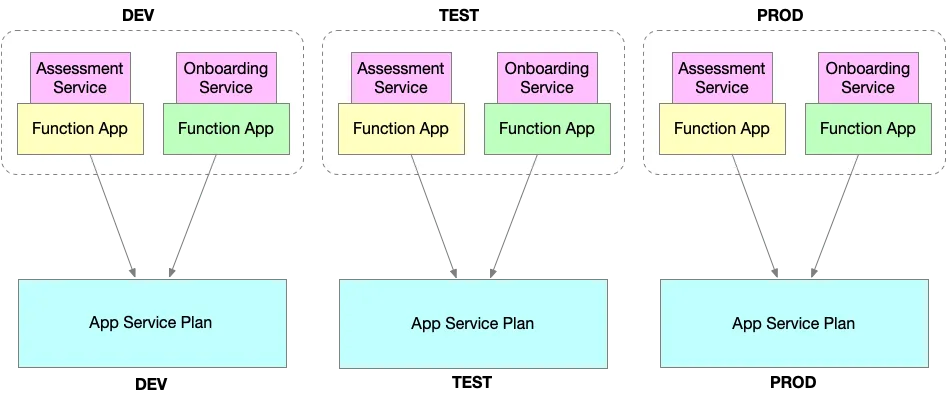

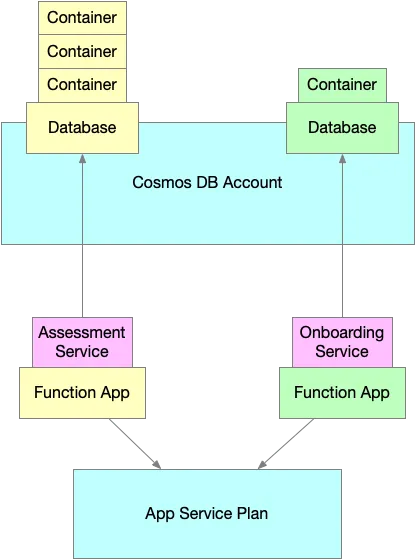

Imagine two microservices: the Assessment Service and the Onboarding Service. Each has separate teams, codebases, and development cycles. Both services are hosted on their own Function Apps across multiple environments — DEV, TEST, and PROD.

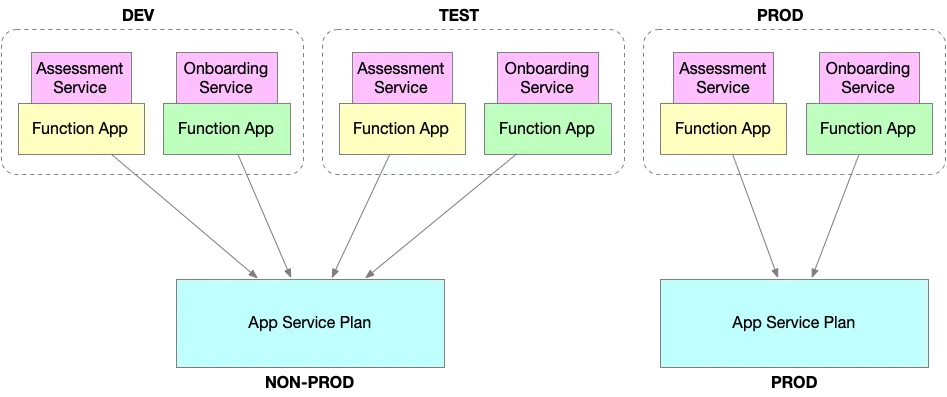

Since these Function Apps depend on the App Service Plan, the shared compute resource must be provisioned first. To optimize cost, DEV and TEST might share a single App Service Plan in a consolidated NON-PROD environment, while PROD should have a dedicated one for isolation and performance.

In this structure, the App Service Plan becomes part of shared infrastructure, while each service maintains its own independent deployment pipelines. Shared infrastructure doesn’t necessarily mirror the number of service environments; instead, it consolidates where possible (e.g., combining DEV/TEST), and isolates where necessary (e.g., PROD).
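One way to express this consolidation, sketched under assumptions, is to give the shared infrastructure its own environment variable with fewer allowed values than the services have. The variable name `shared_environment`, the SKU choices, the default region, and the resource names below are hypothetical, and a `provider "azurerm"` block is assumed to exist elsewhere in the module.

```hcl
# Hypothetical inputs for the shared infrastructure.
variable "shared_environment" {
  type        = string
  description = "Shared infrastructure environment: nonprod serves DEV and TEST."

  validation {
    condition     = contains(["nonprod", "prod"], var.shared_environment)
    error_message = "The shared_environment must be nonprod or prod."
  }
}

variable "location" {
  type    = string
  default = "eastus" # assumption
}

locals {
  # Illustrative sizing: a smaller plan for NON-PROD, a larger one for PROD.
  plan_sku = {
    nonprod = "B1"
    prod    = "EP1"
  }
}

resource "azurerm_resource_group" "shared" {
  name     = "rg-shared-${var.shared_environment}"
  location = var.location
}

resource "azurerm_service_plan" "shared" {
  name                = "asp-shared-${var.shared_environment}"
  resource_group_name = azurerm_resource_group.shared.name
  location            = azurerm_resource_group.shared.location
  os_type             = "Linux"
  sku_name            = local.plan_sku[var.shared_environment]
}
```

With this shape, the shared infrastructure is applied twice (nonprod and prod), while each service is applied three times (DEV, TEST, and PROD).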

Layering in Data Services with Cosmos DB

This pattern extends to data layers as well. Suppose each service uses Cosmos DB. The best practice in a microservices architecture is to implement a database-per-service model. Each Function App interacts exclusively with its own Cosmos DB database and container set, preventing cross-service data leakage.

Cosmos DB, like Azure Functions, has a shared infrastructure component: the Cosmos DB Account. This account defines scale and replication settings across databases. The databases themselves are then provisioned within this account, scoped per service. This mirrors the Function App → App Service Plan relationship.
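The account, database, and container hierarchy might look like the following sketch. It assumes the azurerm provider at version 4.x (where containers take `partition_key_paths`), the NoSQL API, and hypothetical names, region, consistency level, throughput, and partition key. It is shown as one configuration only to make the hierarchy visible.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 4.0" # assumption; argument names differ in older versions
    }
  }
}

# Subscription is assumed to come from the ARM_SUBSCRIPTION_ID environment variable.
provider "azurerm" {
  features {}
}

resource "azurerm_resource_group" "shared" {
  name     = "rg-shared-nonprod" # hypothetical
  location = "eastus"
}

# Shared layer: the account carries replication and consistency settings.
resource "azurerm_cosmosdb_account" "shared" {
  name                = "cosmos-shared-nonprod-001" # must be globally unique
  resource_group_name = azurerm_resource_group.shared.name
  location            = azurerm_resource_group.shared.location
  offer_type          = "Standard"
  kind                = "GlobalDocumentDB"

  consistency_policy {
    consistency_level = "Session"
  }

  geo_location {
    location          = azurerm_resource_group.shared.location
    failover_priority = 0
  }
}

# Service layer: one database per service, scoped inside the shared account.
resource "azurerm_cosmosdb_sql_database" "assessment" {
  name                = "assessment"
  resource_group_name = azurerm_resource_group.shared.name
  account_name        = azurerm_cosmosdb_account.shared.name
  throughput          = 400 # shared by the containers below; illustrative value
}

resource "azurerm_cosmosdb_sql_container" "assessments" {
  name                = "assessments"
  resource_group_name = azurerm_resource_group.shared.name
  account_name        = azurerm_cosmosdb_account.shared.name
  database_name       = azurerm_cosmosdb_sql_database.assessment.name
  partition_key_paths = ["/id"] # hypothetical partition key
}
```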

Building Root Modules in Terraform

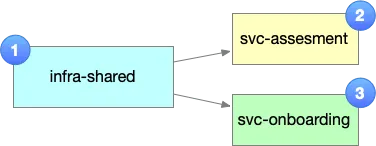

To manage all this in code, we organize the infrastructure into three Terraform root modules:

- Shared Infrastructure Root Module: This module provisions the App Service Plan and the Cosmos DB Account — resources that are shared across services.

- svc-assessment Root Module: Contains everything needed to deploy the Assessment Service, including its Function App, Cosmos DB database, and containers.

- svc-onboarding Root Module: Mirrors the assessment module but handles the Onboarding Service’s deployment and data infrastructure.

This structure gives each service a blast radius boundary. Each can be deployed, modified, or destroyed independently without risk to the others. Importantly, the shared infrastructure is managed in isolation from the services, reinforcing independence and reducing coupling.
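Part of what makes this boundary real is that each root module keeps its own state. A sketch of a backend block for the svc-assessment root module, assuming state is stored in Azure Storage, could look like this. The resource group, storage account, container, and key are hypothetical names.

```hcl
# svc-assessment/backend.tf (hypothetical file)
terraform {
  backend "azurerm" {
    resource_group_name  = "rg-terraform-state" # hypothetical
    storage_account_name = "stterraformstate"   # hypothetical
    container_name       = "tfstate"
    # A distinct key per root module and environment keeps the states separate.
    key = "svc-assessment/dev.tfstate"
  }
}
```

Backend blocks cannot reference variables, so the environment-specific `key` is often supplied at initialization instead, for example with `terraform init -backend-config="key=svc-assessment/test.tfstate"`.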

Dependency Management in Terraform

Dependencies between root modules are defined through input variables. The svc-assessment and svc-onboarding modules consume input values that define which App Service Plan and Cosmos DB Account to use. These values are outputs from the shared infrastructure module.

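This dependency dictates the deployment sequence: the shared infrastructure must be provisioned first, since the service modules rely on its outputs to function. Because each root module has its own state, Terraform does not enforce this ordering across root modules; the pipeline or operator that runs them must.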
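The hand-off between root modules could be sketched as follows. The file paths, output names, and variable names are assumptions. The resources `azurerm_service_plan.shared`, `azurerm_cosmosdb_account.shared`, and `azurerm_resource_group.shared` are assumed to be defined elsewhere in the shared infrastructure module.

```hcl
# ---- shared-infra/outputs.tf (hypothetical) ----
output "service_plan_id" {
  value = azurerm_service_plan.shared.id
}

output "cosmosdb_account_name" {
  value = azurerm_cosmosdb_account.shared.name
}

output "shared_resource_group_name" {
  value = azurerm_resource_group.shared.name
}

# ---- svc-assessment/variables.tf (hypothetical) ----
variable "service_plan_id" {
  type        = string
  description = "ID of the shared App Service Plan; passed to the Function App's service_plan_id."
}

variable "cosmosdb_account_name" {
  type        = string
  description = "Name of the shared Cosmos DB Account."
}

variable "shared_resource_group_name" {
  type        = string
  description = "Resource group that contains the shared Cosmos DB Account."
}

# ---- svc-assessment/main.tf (hypothetical excerpt) ----
# The service's database is created inside the account it was told to use.
resource "azurerm_cosmosdb_sql_database" "assessment" {
  name                = "assessment"
  resource_group_name = var.shared_resource_group_name
  account_name        = var.cosmosdb_account_name
}
```

How the values travel between the two modules is a separate choice. One option is for a pipeline to read them with `terraform output -raw service_plan_id` in the shared module and pass them to the service module as `-var` arguments or a generated `.tfvars` file.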

Color-Coding the Architecture

To clarify these relationships, the accompanying diagram uses color coding:

- Blue: Resources provisioned by the shared-infra root module (App Service Plan, Cosmos DB Account)

- Yellow: Resources provisioned by the svc-assessment module (Function App, Cosmos DB Database, containers)

- Green: Resources provisioned by the svc-onboarding module (Function App, Cosmos DB Database, containers)

Each colored box in the diagram represents a Terraform root module — a folder containing .tf files. The lines between resources represent both Terraform-level and Azure resource dependencies.

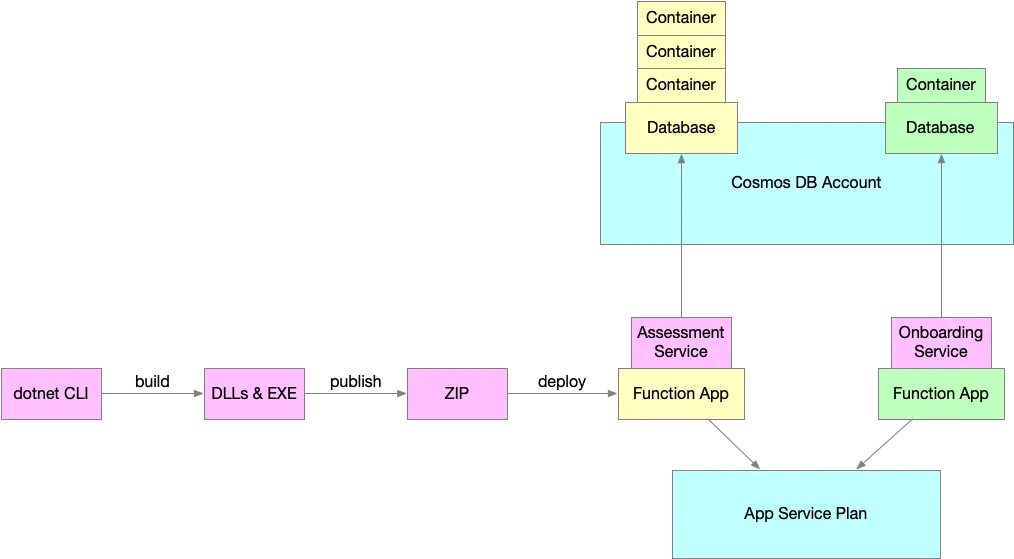

Application Code Deployment Workflow

There is one color in the diagrams that I didn’t mention: magenta. It represents the application code that gets deployed to the Function Apps. That code is not provisioned by Terraform, so there is no Terraform root module for it. Application code is deployed independently of the infrastructure. For .NET-based functions, the deployment process uses the dotnet CLI. First, the code is built into DLLs and executables. Then it’s published into a set of deployable artifacts. These artifacts are zipped and deployed to the respective Function App by an external deployment process, separate from Terraform.

Conclusion

Designing infrastructure as code for scalable solutions across teams requires thoughtful root module architecture. By decomposing shared infrastructure and service-specific components into independently deployable Terraform root modules, you gain modularity, control, and clarity. Dependencies are clearly modeled, services are isolated, and teams can move independently.

The result is a resilient, scalable, and maintainable infrastructure architecture — one that aligns with cloud-native principles and supports your organization’s growth without sacrificing reliability or agility.