Taste the Rainbow: Multi-Cloud Style

Terraform has long been celebrated as the infrastructure-as-code tool for the multi-cloud era. But let’s be honest — how many of us actually use it that way? How often do we see a single Terraform deployment stretch across AWS, Azure, and GCP simultaneously? Despite all the marketing buzz, the reality is most Terraform deployments are still single-cloud affairs.

In my book Mastering Terraform, I emphasize principles like module cohesion and minimizing blast radius. But every once in a while, it’s good to break your own rules — especially when it means taking a vibrant dip into the multi-cloud rainbow. So, I set out on a simple but colorful mission: create a global load balancer that distributes traffic across three clouds, each serving its own splash of visual flair.

Check out the code.

The Mission

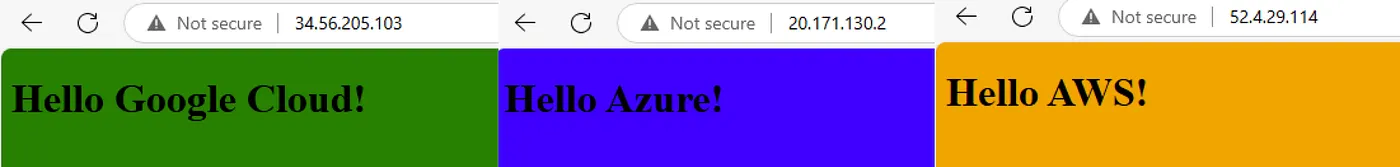

The goal? Deploy a global load-balanced web app spanning AWS, Azure, and GCP. The app itself is a humble static splash page, color-coded by cloud: orange for AWS, blue for Azure, and green for Google Cloud. Google may claim all the primary colors in its logo, but I’m saving red for Oracle. IBM? Sorry, blue is taken. Wink.

Building the Images with Packer

First up, Packer templates to create the VM images for each cloud.

AWS

I find the AWS builder to be the most cryptic, mainly because of the cumbersome Marketplace image lookup via the amazon-ami data source.

data "amazon-ami" "ubuntu2404" {
  filters = {
    virtualization-type = "hvm"
    name                = "ubuntu/images/hvm-ssd-gp3/ubuntu-noble-24.04-amd64-server-*"
    root-device-type    = "ebs"
  }
  owners      = ["099720109477"] # Canonical
  most_recent = true
  region      = var.aws_primary_region
}

source "amazon-ebs" "vm" {
  region        = var.aws_primary_region
  ami_name      = "${var.image_name}-${var.image_version}"
  instance_type = "t2.small"
  ssh_username  = "ubuntu"
  ssh_interface = "public_ip"
  communicator  = "ssh"
  source_ami    = data.amazon-ami.ubuntu2404.id
}

Azure

The Azure builder is definitely more complex, mainly because I am using the Azure Compute Gallery (formerly the Shared Image Gallery) as the destination for the newly created images rather than just letting them get dumped into a Resource Group.

source "azure-arm" "vm" {
  subscription_id                   = var.subscription_id
  tenant_id                         = var.tenant_id
  location                          = var.azure_primary_location
  managed_image_name                = "${var.image_name}-${var.image_version}"
  managed_image_resource_group_name = var.gallery_resource_group

  shared_image_gallery_destination {
    subscription   = var.subscription_id
    resource_group = var.gallery_resource_group
    gallery_name   = var.gallery_name
    image_name     = var.image_name
    image_version  = var.image_version
    replication_regions = [
      var.azure_primary_location
    ]
  }

  use_azure_cli_auth           = true
  communicator                 = "ssh"
  os_type                      = "Linux"
  image_publisher              = "Canonical"
  image_offer                  = "ubuntu-24_04-lts"
  image_sku                    = "ubuntu-pro"
  vm_size                      = "Standard_DS2_v2"
  allowed_inbound_ip_addresses = [var.my_ip_address]
}

GCP

Google’s builder is definitely the most spartan — but I like that.

source "googlecompute" "vm" {
  project_id   = var.gcp_project_id
  source_image = "ubuntu-2404-noble-amd64-v20250502a"
  ssh_username = "packer"
  zone         = var.gcp_primary_region
  image_name   = "${var.image_name}-${replace(var.image_version, ".", "-")}"
}

Provisioning with Style

Each image installs Nginx and serves a custom HTML splash page with a color-coded background.

First, I install Nginx with a short script:

sudo apt-get update
sudo DEBIAN_FRONTEND=noninteractive apt-get install -y nginx

A simple shell provisioner runs it:

provisioner "shell" {
  execute_command = local.execute_command
  script          = "./scripts/install-nginx.sh"
}

Then I create a template for the amazing HTML page.

<!DOCTYPE html>
<html>
<head>
<style>
body {
  background-color: __COLOR__;
}
</style>
</head>
<body>
<h1>__MESSAGE__</h1>
</body>
</html>

Yes, I know, my UI building skills are impressive.

Then I write a little bash script that fills in the template's two placeholders with a custom message and a background color.

#!/bin/bash
set -e

echo "Rendering HTML with COLOR='$CUSTOM_COLOR' and MESSAGE='$CUSTOM_MESSAGE'"

# The placeholder tokens __COLOR__ and __MESSAGE__ are assumed names; they
# must match whatever markers the template file actually uses.
sed -e "s|__COLOR__|${CUSTOM_COLOR}|" \
    -e "s|__MESSAGE__|${CUSTOM_MESSAGE}|" \
    /tmp/index.html.tpl > /var/www/html/index.nginx-debian.html

# Ensure correct permissions
chown www-data:www-data /var/www/html/index.nginx-debian.html
chmod 644 /var/www/html/index.nginx-debian.html

Each cloud gets its own provisioner block with the appropriate color and message:

AWS

provisioner "shell" {
  execute_command = local.execute_command
  script          = "./scripts/update-message.sh"
  env = {
    CUSTOM_MESSAGE = "Hello AWS!"
    CUSTOM_COLOR   = "orange"
  }
  only = ["amazon-ebs.vm"]
}

I use the only attribute on the shell provisioner to ensure that each cloud platform gets its own unique parameters passed to the update-message.sh script!

What Region am I in again? Haha just kidding. Oh crap! Did I really just leave it in East US 1?

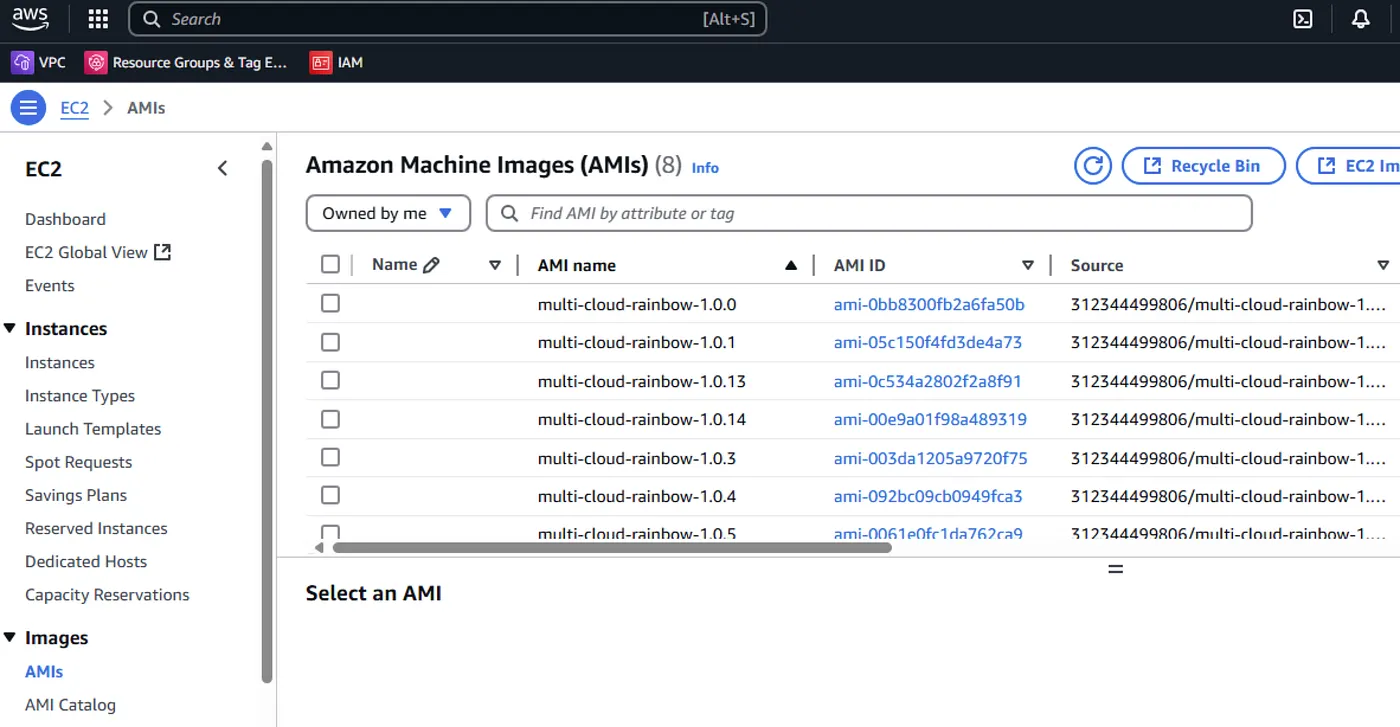

On AWS, virtual machine images are called AMIs — short for Amazon Machine Images (not “Amy’s,” though I sometimes wonder myself). Unfortunately, they tend to get dumped into a flat list within the region they’re built in, with no built-in structure or grouping. It’s functional, but definitely not elegant.

Azure

provisioner "shell" {
  execute_command = local.execute_command
  script          = "./scripts/update-message.sh"
  env = {
    CUSTOM_MESSAGE = "Hello Azure!"
    CUSTOM_COLOR   = "blue"
  }
  only = ["azure-arm.vm"]
}

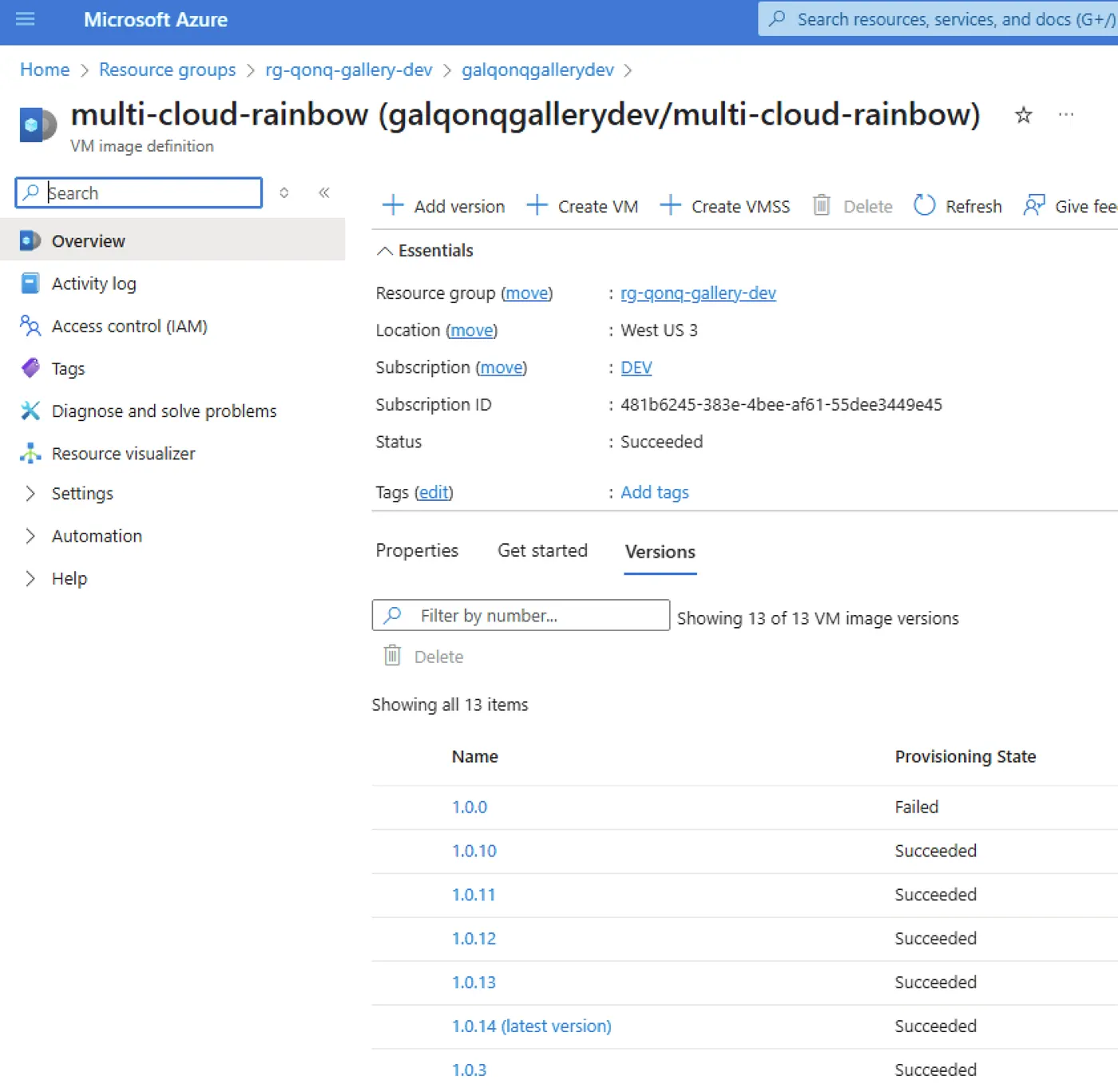

On Azure, things work a bit differently. I’m using the Azure Compute Gallery, which lets you group related virtual machine images and replicate them across regions with ease. While it’s not strictly required, I always use it when building images with Packer — it just makes the process more organized and scalable.

Google Cloud

provisioner "shell" {
  execute_command = local.execute_command
  script          = "./scripts/update-message.sh"
  env = {
    CUSTOM_MESSAGE = "Hello Google Cloud!"
    CUSTOM_COLOR   = "green"
  }
  only = ["googlecompute.vm"]
}

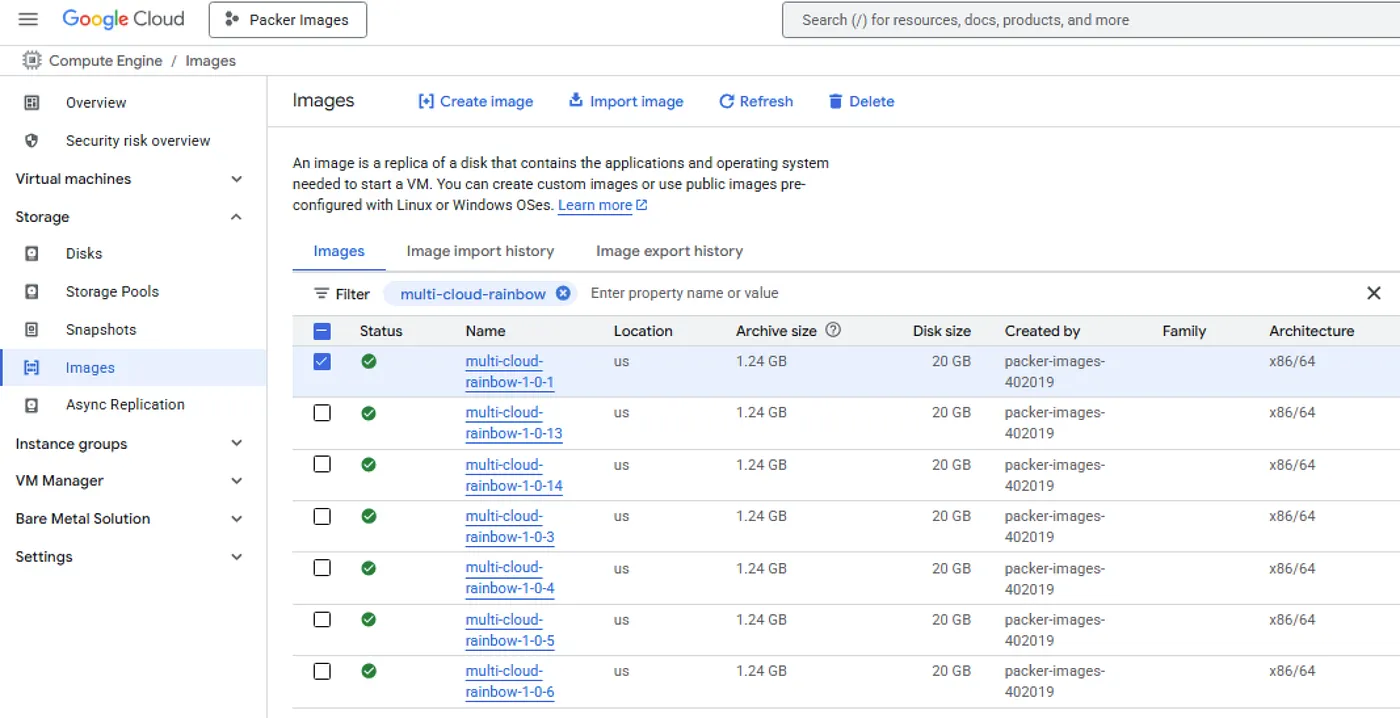

As you can see, Google Cloud images are built and stored in a separate project, which I’ve named “Packer Images.” Within that project, the VM images are presented in a way that resembles AWS’s approach: a straightforward flat list of images.
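Putting the pieces together, the Packer build block might look roughly like the sketch below. This is a minimal sketch under assumptions, not the exact template: the value of local.execute_command, the local path ./files/index.html.tpl, and the file provisioner that uploads the template to /tmp/index.html.tpl are not shown in this post and are illustrative guesses.

```hcl
locals {
  # Assumed value: a widely used Packer idiom that runs the uploaded script
  # with sudo while preserving the provisioner's environment variables.
  execute_command = "chmod +x {{ .Path }}; {{ .Vars }} sudo -E sh '{{ .Path }}'"
}

build {
  # One build, three sources: each source produces an image on its own cloud.
  sources = [
    "source.amazon-ebs.vm",
    "source.azure-arm.vm",
    "source.googlecompute.vm",
  ]

  provisioner "shell" {
    execute_command = local.execute_command
    script          = "./scripts/install-nginx.sh"
  }

  # Hypothetical step: upload the HTML template that update-message.sh reads.
  provisioner "file" {
    source      = "./files/index.html.tpl" # assumed local path
    destination = "/tmp/index.html.tpl"
  }

  # Runs only for the AWS source; the Azure and Google Cloud blocks follow
  # the same shape with their own "only" filter, message, and color.
  provisioner "shell" {
    execute_command = local.execute_command
    script          = "./scripts/update-message.sh"
    env = {
      CUSTOM_MESSAGE = "Hello AWS!"
      CUSTOM_COLOR   = "orange"
    }
    only = ["amazon-ebs.vm"]
  }
}
```

Provisioners run in the order they are declared, so the template upload has to come before the script that renders it.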

Terraforming Across Clouds

With our images built for each of the three clouds, it’s time to Terraform some environments. Each one will follow the same basic structure: a simple network and a virtual machine. To keep things clean and consistent, I’ve created modules that encapsulate the cloud-specific code needed to provision equivalent resources on each platform.

Before diving into the modules, though, we need to establish a workspace on each cloud to organize the environment. This setup varies by provider, reflecting their different design philosophies. Azure and GCP offer clear organizational units — Resource Groups and Projects, respectively — while AWS takes a more loosely coupled approach, relying on tagging strategies to group related resources.
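For context, a single root module can declare all three providers side by side. Here is a minimal sketch, assuming hypothetical variable names var.aws_region and var.gcp_region (only var.azure_region appears in this post) and leaving credentials, including the Azure subscription, to each provider's usual environment-based authentication:

```hcl
terraform {
  required_providers {
    aws     = { source = "hashicorp/aws" }
    azurerm = { source = "hashicorp/azurerm" }
    google  = { source = "hashicorp/google" }
    random  = { source = "hashicorp/random" }
  }
}

provider "aws" {
  region = var.aws_region # hypothetical variable name
}

# The azurerm provider requires a features block, even an empty one.
provider "azurerm" {
  features {}
}

provider "google" {
  region = var.gcp_region # hypothetical variable name
}
```

Version constraints are left out to keep the sketch short; a real configuration would normally pin them.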

AWS

AWS has a Resource Group. Well, not really.

resource "aws_resourcegroups_group" "main" {
  name = "${var.application_name}-${random_string.random_string.result}"

  resource_query {
    type = "TAG_FILTERS_1_0"
    query = jsonencode({
      ResourceTypeFilters = [
        "AWS::EC2::Instance"
      ]
      TagFilters = [
        {
          Key    = "Application"
          Values = [var.application_name]
        },
        {
          Key    = "Environment"
          Values = [random_string.random_string.result]
        }
      ]
    })
  }
}
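Because membership in this group is decided purely by tags, the EC2 instance has to carry matching Application and Environment tags. A minimal sketch of one way to keep them in sync, assuming a hypothetical local named common_tags that gets passed to the Stack module's tags input:

```hcl
locals {
  # Hypothetical local: the same key/value pairs the tag filters look for.
  common_tags = {
    Application = var.application_name
    Environment = random_string.random_string.result
  }
}

# Passing "tags = local.common_tags" into the AWS Stack module lets the
# instance it creates show up in the resource group automatically.
```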

And then I reference my image:

data "aws_ami" "main" {
  most_recent = true

  filter {
    name   = "name"
    values = ["${var.image_name}-${var.image_version}"]
  }

  owners = ["self"]
}

Azure

On Azure, I have a Resource Group. For real this time.

resource "azurerm_resource_group" "rg" {
  name     = "rg-${var.application_name}-${random_string.random_string.result}"
  location = var.azure_region
}

Then I reference my machine image.

data "azurerm_shared_image_version" "main" {
  name                = var.image_version
  image_name          = var.image_name
  gallery_name        = "galqonqgallerydev"
  resource_group_name = "rg-qonq-gallery-dev"
}

Google Cloud

On Google Cloud, I have a project.

data "google_billing_account" "main" {
  display_name    = "Default"
  lookup_projects = false
}

resource "google_project" "main" {
  name            = "${var.application_name}-${random_string.random_string.result}"
  project_id      = "${var.application_name}-${random_string.random_string.result}"
  org_id          = var.gcp_organization
  billing_account = data.google_billing_account.main.id
}

resource "google_project_service" "compute" {
  project = google_project.main.project_id
  service = "compute.googleapis.com"

  timeouts {
    create = "30m"
    update = "40m"
  }

  disable_dependent_services = true
}

But I also need to reference the Billing Account and enable the Google Compute Engine (GCE) API. It’s a hazing ritual on Google Cloud — don’t pay it much mind.

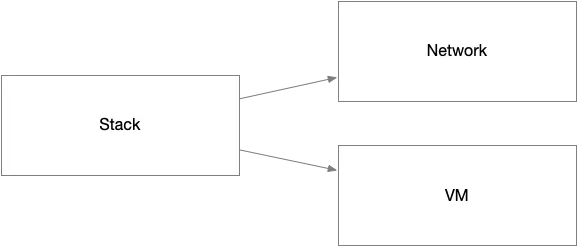

A Cloud Abstraction Layer

To instantiate the environments, I followed a common pattern built around three core modules: a Network module, a VM module, and a Stack module. The Stack module acts as a wrapper that brings together the Network and VM modules, forming a complete deployment unit for each cloud. This structure provides a clear separation of concerns while making the full workload for each platform easy to manage and replicate.

In the AWS Stack module, it takes a little extra effort to select an Availability Zone.

data "aws_availability_zones" "available" {
  state = "available"
}

resource "random_shuffle" "az" {
  input        = data.aws_availability_zones.available.names
  result_count = 1
}

The Stack module code below shows how the AWS modules are designed with a level of abstraction that mostly avoids cloud-specific terminology, making them easy to mirror on other providers like Azure and GCP. The network module provisions networking resources from inputs like name, address_space, and an availability zone chosen via random_shuffle.

The vm module similarly abstracts away cloud-specific details by taking generic inputs like subnet_id, vm_image_id, and vm_size, and wiring them together in a reusable way. Because of this abstraction, equivalent modules for Azure and GCP can follow the same structure and interface, enabling a consistent and portable module design across all three clouds.

module "network" {
  source            = "../../network/aws"
  name              = var.name
  address_space     = var.address_space
  availability_zone = random_shuffle.az.result[0]
  tags              = var.tags
}

module "vm" {
  source            = "../../vm/aws"
  name              = var.name
  subnet_id         = module.network.subnet_id
  vm_image_id       = var.vm_image_id
  vm_size           = var.vm_size
  ssh_public_key    = var.ssh_public_key
  ssh_private_key   = var.ssh_private_key
  security_group_id = module.network.security_group_id
  tags              = var.tags
  username          = var.username
}

I organized the module folder structure like this:

- modules
  - network
    - aws
    - azure
    - gcp
  - stack
    - aws
    - azure
    - gcp
  - vm
    - aws
    - azure
    - gcp

Each version of the module follows a consistent pattern, with interfaces that are nearly identical across clouds. If I wanted to, I could have enforced strict uniformity across the Network, VM, and Stack module sets — ensuring that the root module could interact with any of them in exactly the same way, regardless of the underlying cloud. Honestly, isn’t that what we all imagined Terraform would let us do from the start? I know I did.
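To make that concrete, here is a rough sketch of how a root module could call the three Stack modules. The input names mirror the AWS Stack module shown earlier; the assumption that the Azure and GCP stacks accept the same inputs, the source paths, the literal sizes and address ranges, and the variables var.username, var.ssh_public_key, var.ssh_private_key, and var.gcp_image_id are all illustrative.

```hcl
module "aws_stack" {
  source          = "./modules/stack/aws" # assumed path from the root module
  name            = "${var.application_name}-aws"
  address_space   = "10.1.0.0/16" # assumed value and type
  vm_image_id     = data.aws_ami.main.id
  vm_size         = "t3.small" # assumed
  username        = var.username
  ssh_public_key  = var.ssh_public_key
  ssh_private_key = var.ssh_private_key
  tags = {
    Application = var.application_name
    Environment = random_string.random_string.result
  }
}

module "azure_stack" {
  source          = "./modules/stack/azure"
  name            = "${var.application_name}-azure"
  address_space   = "10.2.0.0/16"
  vm_image_id     = data.azurerm_shared_image_version.main.id
  vm_size         = "Standard_B1s" # assumed
  username        = var.username
  ssh_public_key  = var.ssh_public_key
  ssh_private_key = var.ssh_private_key
}

module "gcp_stack" {
  source          = "./modules/stack/gcp"
  name            = "${var.application_name}-gcp"
  address_space   = "10.3.0.0/16"
  vm_image_id     = var.gcp_image_id # hypothetical variable
  vm_size         = "e2-small"       # assumed
  username        = var.username
  ssh_public_key  = var.ssh_public_key
  ssh_private_key = var.ssh_private_key
}

# Every stack exposes the same output name, so the root module can treat
# the three clouds uniformly.
output "public_ip_addresses" {
  value = {
    aws   = module.aws_stack.public_ip_address
    azure = module.azure_stack.public_ip_address
    gcp   = module.gcp_stack.public_ip_address
  }
}
```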

The Crown Jewel: Azure Front Door

To tie it all together, I chose Azure Front Door as the global load balancer. Of course I chose Azure Front Door. You didn’t think I was gonna pick some other cloud’s global load balancer, did you? Front Door lives in its own Azure Resource Group so it doesn’t get mixed up with the Azure workload environment.

Here’s how it all comes together:

- Profile
- Endpoint
- Origin Group
- Origins from each cloud
- Route to bind it all

resource "azurerm_resource_group" "global" {
  name     = "rg-${var.application_name}-${random_string.random_string.result}-global"
  location = var.azure_region
}

resource "azurerm_cdn_frontdoor_profile" "main" {
  name                = "afd-${var.application_name}-${random_string.random_string.result}"
  resource_group_name = azurerm_resource_group.global.name
  sku_name            = "Standard_AzureFrontDoor"
}

resource "azurerm_cdn_frontdoor_endpoint" "main" {
  name                     = "fde-${var.application_name}-${random_string.random_string.result}"
  cdn_frontdoor_profile_id = azurerm_cdn_frontdoor_profile.main.id
}

resource "azurerm_cdn_frontdoor_origin_group" "multi_cloud" {
  name                     = "multi-cloud-group"
  cdn_frontdoor_profile_id = azurerm_cdn_frontdoor_profile.main.id
  session_affinity_enabled = false

  load_balancing {
    sample_size                 = 4
    successful_samples_required = 3
  }

  health_probe {
    protocol            = "Http"
    interval_in_seconds = 30
    path                = "/"
    request_type        = "GET"
  }
}

For Front Door, you need a Profile, an Endpoint, and an Origin Group. The code above sets these up, along with a health probe that checks each origin over HTTP every 30 seconds.

Then I define my three origins — one for each cloud:

resource "azurerm_cdn_frontdoor_origin" "aws" {
  name                           = "aws-origin"
  host_name                      = module.aws_stack.public_ip_address
  cdn_frontdoor_origin_group_id  = azurerm_cdn_frontdoor_origin_group.multi_cloud.id
  certificate_name_check_enabled = false
  enabled                        = true
  http_port                      = 80
  https_port                     = 443
}

resource "azurerm_cdn_frontdoor_origin" "azure" {
  name                           = "azure-origin"
  host_name                      = module.azure_stack.public_ip_address
  cdn_frontdoor_origin_group_id  = azurerm_cdn_frontdoor_origin_group.multi_cloud.id
  certificate_name_check_enabled = false
  enabled                        = true
  http_port                      = 80
  https_port                     = 443
}

resource "azurerm_cdn_frontdoor_origin" "gcp" {
  name                           = "gcp-origin"
  host_name                      = module.gcp_stack.public_ip_address
  cdn_frontdoor_origin_group_id  = azurerm_cdn_frontdoor_origin_group.multi_cloud.id
  certificate_name_check_enabled = false
  enabled                        = true
  http_port                      = 80
  https_port                     = 443
}
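Since the three origin blocks differ only in name and host, they could also be collapsed with for_each. This is an alternative sketch, not what the post's code does; the local named origins and the resource name cloud are made up for illustration.

```hcl
locals {
  # Hypothetical map: cloud label => public IP exposed by each Stack module.
  origins = {
    aws   = module.aws_stack.public_ip_address
    azure = module.azure_stack.public_ip_address
    gcp   = module.gcp_stack.public_ip_address
  }
}

resource "azurerm_cdn_frontdoor_origin" "cloud" {
  for_each = local.origins

  name                           = "${each.key}-origin"
  host_name                      = each.value
  cdn_frontdoor_origin_group_id  = azurerm_cdn_frontdoor_origin_group.multi_cloud.id
  certificate_name_check_enabled = false
  enabled                        = true
  http_port                      = 80
  https_port                     = 443
}

# A route would then collect the IDs with a for expression:
#   cdn_frontdoor_origin_ids = [for o in azurerm_cdn_frontdoor_origin.cloud : o.id]
```

The map keys are static strings, so for_each works even though the IP addresses themselves are only known after apply.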

And finally bring it all together with a route.

resource "azurerm_cdn_frontdoor_route" "global_route" {
  name                          = "fder-${var.application_name}-${random_string.random_string.result}"
  cdn_frontdoor_endpoint_id     = azurerm_cdn_frontdoor_endpoint.main.id
  cdn_frontdoor_origin_group_id = azurerm_cdn_frontdoor_origin_group.multi_cloud.id
  supported_protocols           = ["Http"]
  patterns_to_match             = ["/*"]
  forwarding_protocol           = "HttpOnly"
  https_redirect_enabled        = false

  cdn_frontdoor_origin_ids = [
    azurerm_cdn_frontdoor_origin.aws.id,
    azurerm_cdn_frontdoor_origin.azure.id,
    azurerm_cdn_frontdoor_origin.gcp.id
  ]

  enabled = true
}

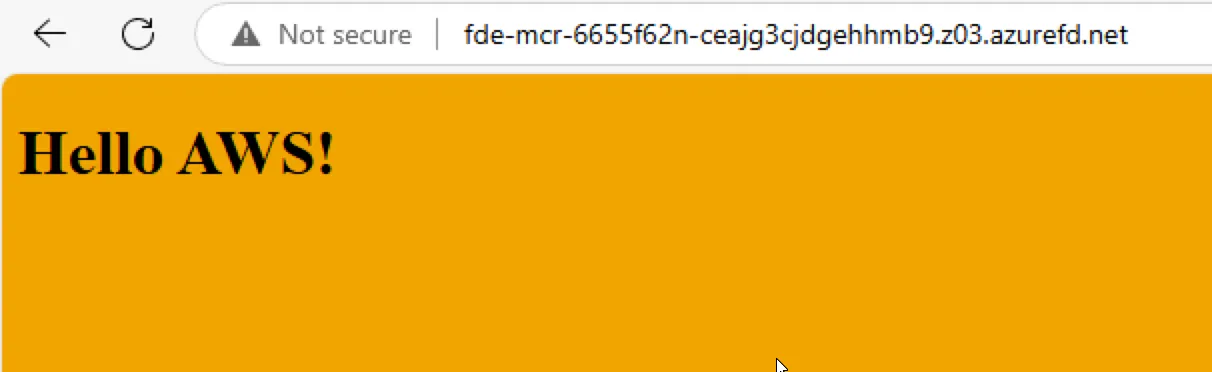

Now, each cloud instance is independently accessible by its public IP. But when I go through the Front Door endpoint, I get routed to whichever origin Front Door thinks will be fastest based on my location.

I probably should have done a more thoughtful job of selecting regions so that Azure would be the one selected by Azure Front Door, but that’s the way it goes when you taste the multi-cloud rainbow!!!
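If the goal were simply to make Azure win, one option besides region selection would be the origin's priority setting. The sketch below is a variation on the Azure origin, not something from the deployed code: it assumes the AWS and GCP origins are given priority = 2, which turns them into failover targets that only receive traffic when the Azure origin is unhealthy.

```hcl
resource "azurerm_cdn_frontdoor_origin" "azure" {
  name                           = "azure-origin"
  host_name                      = module.azure_stack.public_ip_address
  cdn_frontdoor_origin_group_id  = azurerm_cdn_frontdoor_origin_group.multi_cloud.id
  certificate_name_check_enabled = false
  enabled                        = true
  http_port                      = 80
  https_port                     = 443

  # Lower number wins: origins with a higher priority value are used only
  # when no healthy origin with a lower value remains. Valid range is 1-5.
  priority = 1
}
```

The trade-off is that this gives up latency-based balancing across the three clouds in exchange for a fixed preference.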

Conclusion

This experiment was equal parts fun and enlightening. It reminded me of what we all imagined Terraform could be when we first heard the term multi-cloud. It’s entirely possible to build cloud-agnostic modules, abstract them cleanly, and orchestrate them under one roof — or one big, beautiful, multi-cloud rainbow, if you will. Sure, there are more elegant ways to tune region selection or handle resiliency on each cloud platform. But sometimes, it’s worth breaking the mold just to see what’s possible.

So if you’re wondering whether Terraform can really do multi-cloud in a single deployment, have a little fun and taste the rainbow, the multi-cloud rainbow, that is.